Getting started with neural networks

Who we are

We are a group of students from the Department of Informatics and Applied Mathematics at Yerevan State University. In 2014, we were inspired by the successes of neural networks in various fields, especially GoogLeNet’s excellent performance in the ImageNet 2014 competition, and we decided to dive into the topic of neural networks. At the university we study calculus, combinatorics, graph theory, algebra and many other subjects, but the curriculum includes no machine learning. Only a few students take ML courses on Coursera or elsewhere.

Choosing a video course

At the beginning of 2015, the department’s Student Scientific Society started a project to study neural networks. The plan was to choose a video course on the internet, then watch and discuss the videos together at the university once a week. We wanted a course that would cover everything from the very basics to convolutional networks and deep learning. Following the advice Yoshua Bengio gave in his interview on Reddit, we chose this excellent class by Hugo Larochelle.

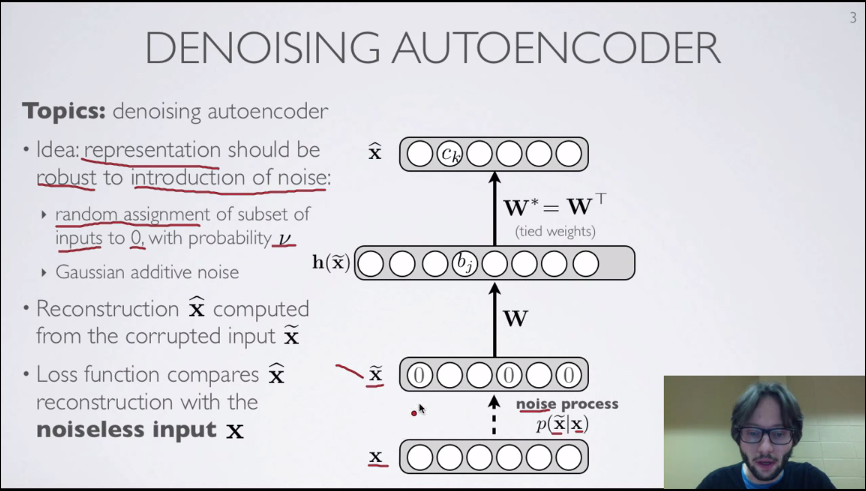

Hugo’s lectures are really great. The first two chapters teach the basic structure of neural networks and describe the backpropagation algorithm in detail. We loved that he showed how to derive the gradients of the loss function. Thanks to this, Hrayr managed to implement a simple multilayer neural network on his own. The next two chapters (which we skipped) cover Conditional Random Fields. The fifth chapter introduces unsupervised learning with Restricted Boltzmann Machines. This was the hardest part for us, mainly because we lacked background in probabilistic graphical models. The sixth chapter, on autoencoders, is our favorite: the magic of denoising autoencoders really surprised us. After that come chapters on deep learning, on sparse coding (another unsupervised learning technique, which we also skipped because of time constraints) and on computer vision (with a strong emphasis on convolutional networks). The last chapter is about natural language processing.

The lectures contain lots of references to papers and demonstrations, the slides are full of visualizations and graphs, and, last but not least, Hugo kindly answers every question posted in the comments on the YouTube videos. After watching the chapter on convolutional networks, we decided to apply what we had learned in a computer vision contest. We looked through the list of active competitions on Kaggle, and the only one related to computer vision was the Diabetic Retinopathy Detection contest. It seemed very hard for a first neural network project, but we decided to try. We’ll describe our experience with this contest in the next post.